1. Introduction

This document covers the installation of Red Hat 6.x Cluster software on physical hardware.

2. Assumptions

1. The base OS has been installed.

2. Networking on the Data/Management NIC(s) has already been configured.

3. The servers are registered with the satellite server and subscribed to the “RHEL Server High Availability” and “RHEL Server Resilient Storage” channels. (This is required to use yum to install the cluster software.)

4. The OS is patched to the current level.

5. The servers will have shared SAN storage.

6. The LUNs are already masked to the HBAs on both servers.

7. SAN Storage is EMC and PowerPath is correctly installed.

8. There are only 2 nodes in the cluster.

NOTE: A quorum disk is required because the cluster has only 2 nodes: it provides the third vote needed to keep quorum during fencing. If you build a cluster with more than 2 nodes, the quorum disk can be omitted.

9. A fencing account has been created for the cluster to use.

(See section 7.2.1, “Create fence ids on both servers from HP iLO”.)

10. All commands for the configuration will be run as root.

11. Root login must be enabled during the build; it can be disabled afterwards.

3. System Requirements

3.1 Servers

This document focuses on building a two-node Red Hat Cluster on 2 HP BL465c G7 blades. Additional nodes can be added later if needed. The server names that will be used are:

| Server Name | Version |
|---|---|
| cipgbcclu1mqs01 | Red Hat 6.3 |
| cipgbcclu1mqs02 | Red Hat 6.3 |

Note: You can build the servers directly with Red Hat 6.3, or build them with Red Hat 6.2 and then upgrade them to 6.3 from Satellite.

3.2 SAN Storage

Dual 4 Gb HBAs on both servers are connected to the shared SAN storage (the LUNs for GFS2 will be masked to both servers). LVM will be used to create the logical volumes, and GFS2 will be the filesystem. (GFS/GFS2 allows multiple systems to mount and use the same volumes at the same time. Because this implementation is Active/Passive, EXT2 could be used; however, GFS2 was chosen in case the volumes need to be mounted on both systems in the future.)

Note: The NFS cluster service will be built on top of the GFS2 filesystems.

In this case there will be 3 x 25 GB LUNs for data and an additional 20 MB LUN for the quorum disk.

| Volume Group | Size | Type | LV Name | Mount Point |
|---|---|---|---|---|
| cipgvg | 25G | GFS2 | cipgvglv | /mqdata/cipg |
| ewpvg | 25G | GFS2 | ewpvglv | /mqdata/ewp |
| wrapvg | 25G | GFS2 | wrapvglv | /mqdata/wrap |

Quorum: 20M, type qdisk, label CIPGmqu1-quorum

3.3 Network Interfaces

There are 2 network interfaces on each server: one for public traffic and one for the private heartbeat. These are the interfaces that had to be used in our blade configuration. (Your configuration may use different “ethX” names. If you are unsure, match each interface's MAC address with the VLAN configured in the iLO.)

eth0 public

eth1 private heartbeat (This may not be necessary, as the cluster will handle heartbeat and multicast.)

3.4 IP Addresses

The addresses assigned for QA are:

# iLO IPs:

10.194.2.128 node01-iLO

10.194.4.252 node02-iLO

# Data IPs:

10.192.45.80 cipgbcclu1mqs01 cipgbcclu1mqs01.tsa.RANDOM.com

10.192.45.81 cipgbcclu1mqs02 cipgbcclu1mqs02.tsa.RANDOM.com

# Heartbeat IPs:

172.16.81.60 cipglu1mqs01-hb

172.16.81.61 cipglu1mqs02-hb

# VIPs:

10.192.45.82 ewpcbcclu1mqm01 ewpcbcclu1mqm01.tsa.RANDOM.com

10.192.45.83 wrapbcclu1mqm01 wrapbcclu1mqm01.tsa.RANDOM.com

10.192.45.84 cipgbcclu1mqm01 cipgbcclu1mqm01.tsa.RANDOM.com

10.192.45.85 cipgbcclu1nfs01 cipgbcclu1nfs01.tsa.RANDOM.com

NOTE: This NFS cluster build only needs the VIP 10.192.45.85; the other VIPs are for applications.

3.5 Services

We will create one NFS service in this cluster, as required by the business.

4. Install Clustering and Cluster Filesystem Packages

4.1 Required Packages

· ccs – Cluster Configuration System

· clusterlib – Red Hat Cluster libraries

· cman - The Cluster Manager

· lvm2-cluster - Extensions to LVM2 to support clusters.

· kmod-gfs - gfs kernel modules

· gfs2-utils - Utilities for creating, checking, modifying, and correcting inconsistencies in GFS2 filesystems.

· rgmanager - Resource Group Manager

· luci - Conga, web interface for managing the cluster configuration and cluster state.

· ricci - listening daemon (dispatcher), reboot, rpm, storage, service and log management modules.

· OpenIPMI-tools-2.0.16-12.el6.x86_64 - Manages IPMI connections for iLOv3

4.2 Install Packages

On all servers:

yum groupinstall "High Availability"

yum groupinstall "High Availability Management"

yum groupinstall "Resilient Storage"

yum install 'OpenIPMI*'

Below is a sample of the summary of the packages and dependencies that will be installed. Accept the list and install. (If you receive a message that some packages are not available, make sure the servers are subscribed to the “RHEL Server High Availability” and “RHEL Server Resilient Storage” channels on the Satellite server, as described in section 2.)

Transaction Summary

===========================================================================

Install XX Package(s)

Total download size: XX.X MB

Is this ok [y/N]: y

4.3 Disable SELinux (Unsupported in Red Hat Clusters)

On all servers;

getenforce

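Disabled ← The output should already be “Disabled”, because SELinux is disabled in the build image. If it is not, do the following:

grep SELINUX= /etc/selinux/config | grep -v "#"

Ensure that the uncommented SELINUX line in /etc/selinux/config reads as follows (edit the file if necessary; the change takes effect at the reboot in section 4.5):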

SELINUX=disabled
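The config-file check above can also be scripted. This is a minimal sketch, not part of the original procedure: the function names are hypothetical, and it only inspects /etc/selinux/config (what applies at the next boot), not the running mode reported by getenforce.

```shell
# Print the mode set by the uncommented SELINUX= line of an SELinux config
# file (default /etc/selinux/config). SELINUXTYPE= lines are not matched.
# If the key appears more than once, this sketch reports the last occurrence.
selinux_config_mode() {
    local cfg="${1:-/etc/selinux/config}"
    grep -E '^[[:space:]]*SELINUX=' "$cfg" | tail -n 1 | cut -d= -f2 | tr -d '[:space:]'
}

# Succeed only if the config file disables SELinux.
selinux_config_is_disabled() {
    [ "$(selinux_config_mode "$1")" = "disabled" ]
}
```

For example, `selinux_config_is_disabled || echo "edit /etc/selinux/config"` can be run on each server.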

4.4 Set init Level for Cluster Services (on all servers)

The cluster services need to run at runlevel 3 (multi-user mode with networking).

Note: Runlevel 5 is enabled as well, so that the services also start if X Windows is installed. In most cases it won't be.

chkconfig --level 01246 cman off

chkconfig --level 01246 clvmd off

chkconfig --level 01246 rgmanager off

chkconfig --level 01246 luci off

chkconfig --level 01246 ricci off

chkconfig --level 01246 gfs2 off

chkconfig --level 35 cman on

chkconfig --level 35 clvmd on

chkconfig --level 35 rgmanager on

chkconfig --level 35 luci on

chkconfig --level 35 ricci on

chkconfig --level 35 gfs2 on
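The twelve chkconfig commands above follow one pattern, so they can be expressed as a loop. A sketch with hypothetical function names; it issues the same chkconfig calls, grouped per service instead of all "off" first.

```shell
# Cluster services from section 4.4.
CLUSTER_SERVICES="cman clvmd rgmanager luci ricci gfs2"

# For each service: off in runlevels 0,1,2,4,6 and on in runlevels 3 and 5,
# matching the individual chkconfig commands above.
set_cluster_runlevels() {
    local svc
    for svc in $CLUSTER_SERVICES; do
        chkconfig --level 01246 "$svc" off || return 1
        chkconfig --level 35 "$svc" on || return 1
    done
}

# Show the resulting runlevel settings for review.
show_cluster_runlevels() {
    local svc
    for svc in $CLUSTER_SERVICES; do
        chkconfig --list "$svc"
    done
}
```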

4.5 Disable Unsupported Services (on all servers)

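The following services will cause the cluster to malfunction and must be disabled.

Note: iptables and ip6tables are the Linux firewalls, and acpid handles ACPI power management events. (If acpid is running, a power-off sent by the iLO fence device can be turned into a clean shutdown instead of an immediate power-off, which delays fencing.)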

chkconfig --level 0123456 iptables off

chkconfig --level 0123456 ip6tables off

chkconfig --level 0123456 acpid off
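The same three commands as a loop; a minimal sketch with a hypothetical function name.

```shell
# Services that section 4.5 says must not run on a cluster node.
UNSUPPORTED_SERVICES="iptables ip6tables acpid"

# Turn each service off in every runlevel (0-6), as the commands above do.
disable_unsupported_services() {
    local svc
    for svc in $UNSUPPORTED_SERVICES; do
        chkconfig --level 0123456 "$svc" off || return 1
    done
}
```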

Reboot both servers:

init 6

or

shutdown -r now

4.6 Service init Confirmation (on all servers)

Once the servers have rebooted, ensure that the required services have started (and that the others have not):

These services should be running. (gfs2 will return nothing on the failover node.)

service cman status (This service may not run until the cluster is built.)

service rgmanager status

service ricci status

service luci status

service clvmd status

service gfs2 status

These services should be ‘stopped’:

service iptables status

service ip6tables status

service acpid status
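The status checks above can be collected into one report. A sketch with a hypothetical function name; it assumes the LSB convention that `service NAME status` exits 0 only when the service is running. As noted above, cman may not run until the cluster is built, and gfs2 may report nothing on the failover node, so a FAIL for those two is not necessarily a problem.

```shell
# Compare each service's state with the expected state ("running" or
# "stopped") and print one OK/FAIL line per service. Returns 1 if any differ.
check_services() {
    local want="$1" svc state rc=0
    shift
    for svc in "$@"; do
        if service "$svc" status >/dev/null 2>&1; then
            state=running
        else
            state=stopped
        fi
        if [ "$state" = "$want" ]; then
            echo "OK   $svc is $state"
        else
            echo "FAIL $svc is $state (expected $want)"
            rc=1
        fi
    done
    return "$rc"
}

# Example:
#   check_services running cman rgmanager ricci luci clvmd gfs2
#   check_services stopped iptables ip6tables acpid
```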

5. Create the cluster


We will create the cluster using LUCI (Web Interface), and all cluster configurations will be saved in “/etc/cluster/cluster.conf” on both nodes.


5.1 Reset the password for “ricci” on both servers

On both servers, reset the password for the “ricci” user:

passwd ricci

Changing password for user ricci.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

Record this password; it is needed when creating the cluster in LUCI.

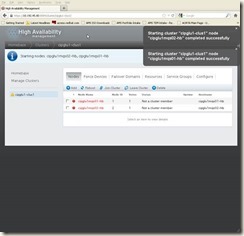

5.2 Setup the basic cluster from LUCI on first server

Use the following URL to access the LUCI web interface on the first server, and log in with the root credentials:

https://10.192.45.80:8084

Enter the root credentials for the first server to log in:

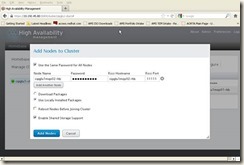

Click the “Add” button and enter the following information:

Cluster Name: cipglu1-clus1

Node Name: cipglu1mqs01-hb

Ricci Hostname: cipglu1mqs01-hb

Password: the ricci password set in section 5.1

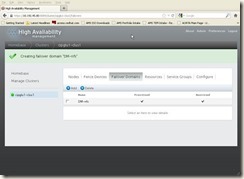

Click “Create Cluster”; the following screen is displayed:

Click the “Nodes” button and add the second node:

Node Name: cipglu1mqs02-hb

Ricci Hostname: cipglu1mqs02-hb

Password: the ricci password set in section 5.1

Click the “Add Nodes” button; the following screen is displayed:

5.3 Reboot both cluster nodes

Reboot both nodes to make sure everything is ready for the cluster:

init 6

6. Configuring Shared Storage on All Servers

NOTE: Perform the following steps on ALL SERVERS to verify that multi-path is available and to identify the physical disks on each server.

6.1 Determine What SAN Disk is Available

Note: Perform these steps on both/all servers so that you know the EMC device names on each one.

powermt display dev=all

Look for the “Pseudo name=emcpowerX” entries; there should be 4 of them (see below).

Ensure that everything is healthy and that all paths are alive.

Pseudo name=emcpowera

CLARiiON ID=APM00120701244 [CIPGBCCLU1MQS_CLUSTER]

Logical device ID=600601602A802C005CB50BD5321AE211 [LUN 954]

state=alive; policy=CLAROpt; priority=0; queued-IOs=0;

Owner: default=SP A, current=SP A Array failover mode: 4

==============================================================================

--------------- Host --------------- - Stor - -- I/O Path -- -- Stats ---

### HW Path I/O Paths Interf. Mode State Q-IOs Errors

==============================================================================

4 qla2xxx sde SP A4 active alive 0 0

4 qla2xxx sdi SP B6 active alive 0 0

5 qla2xxx sdm SP A6 active alive 0 0

5 qla2xxx sdq SP B4 active alive 0 0

Pseudo name=emcpowerd

CLARiiON ID=APM00120701244 [CIPGBCCLU1MQS_CLUSTER]

Logical device ID=600601602A802C006478DAB3321AE211 [LUN 951]

state=alive; policy=CLAROpt; priority=0; queued-IOs=0;

Owner: default=SP A, current=SP A Array failover mode: 4

==============================================================================

--------------- Host --------------- - Stor - -- I/O Path -- -- Stats ---

### HW Path I/O Paths Interf. Mode State Q-IOs Errors

==============================================================================

4 qla2xxx sdb SP A4 active alive 0 0

4 qla2xxx sdf SP B6 active alive 0 0

5 qla2xxx sdj SP A6 active alive 0 0

5 qla2xxx sdn SP B4 active alive 0 0

Pseudo name=emcpowerc

CLARiiON ID=APM00120701244 [CIPGBCCLU1MQS_CLUSTER]

Logical device ID=600601602A802C006678DAB3321AE211 [LUN 952]

state=alive; policy=CLAROpt; priority=0; queued-IOs=0;

Owner: default=SP B, current=SP B Array failover mode: 4

==============================================================================

--------------- Host --------------- - Stor - -- I/O Path -- -- Stats ---

### HW Path I/O Paths Interf. Mode State Q-IOs Errors

==============================================================================

4 qla2xxx sdc SP A4 active alive 0 0

4 qla2xxx sdg SP B6 active alive 0 0

5 qla2xxx sdk SP A6 active alive 0 0

5 qla2xxx sdo SP B4 active alive 0 0

Pseudo name=emcpowerb

CLARiiON ID=APM00120701244 [CIPGBCCLU1MQS_CLUSTER]

Logical device ID=600601602A802C006878DAB3321AE211 [LUN 953]

state=alive; policy=CLAROpt; priority=0; queued-IOs=0;

Owner: default=SP A, current=SP A Array failover mode: 4

==============================================================================

--------------- Host --------------- - Stor - -- I/O Path -- -- Stats ---

### HW Path I/O Paths Interf. Mode State Q-IOs Errors

==============================================================================

4 qla2xxx sdd SP A4 active alive 0 0

4 qla2xxx sdh SP B6 active alive 0 0

5 qla2xxx sdl SP A6 active alive 0 0

5 qla2xxx sdp SP B4 active alive 0 0
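Rather than reading every path table by eye, the output can be summarized. A minimal awk sketch with a hypothetical function name; it assumes the column layout shown above (path state in the 7th field, error count in the 9th), which may differ between PowerPath versions.

```shell
# Summarize "powermt display dev=all" output read from stdin.
# Prints "<pseudo-device-count> <problem-path-count>", where a problem path
# is one whose state is not "alive" or whose error count is non-zero.
powermt_summary() {
    awk '
        /^Pseudo name=/ { devices++ }
        # Path rows start with a numeric HBA number,
        # e.g. "4 qla2xxx sde SP A4 active alive 0 0"
        $1 ~ /^[0-9]+$/ && NF >= 9 {
            if ($7 != "alive" || $9 != 0) bad++
        }
        END { printf "%d %d\n", devices, bad }
    '
}

# Example: on these servers a healthy result is "4 0".
#   powermt display dev=all | powermt_summary
```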

Check the size of each disk so that you know which ones to use for the data VGs (cipgvg, ewpvg and wrapvg) and which one for the quorum disk.

fdisk -l /dev/emcpowera

Disk /dev/emcpowera: 20 MB, 20971520 bytes ← will be used for the quorum disk

1 heads, 40 sectors/track, 1024 cylinders

Units = cylinders of 40 * 512 = 20480 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/emcpowera doesn't contain a valid partition table ← this can be ignored

fdisk -l /dev/emcpowerb

Disk /dev/emcpowerb: 26.8 GB, 26843545600 bytes ← will be used for cipgvg

64 heads, 32 sectors/track, 25600 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x38f46538

Disk /dev/emcpowerb doesn't contain a valid partition table ← this can be ignored

fdisk -l /dev/emcpowerc

Disk /dev/emcpowerc: 26.8 GB, 26843545600 bytes ← will be used for ewpvg

64 heads, 32 sectors/track, 25600 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x2be52caa

Disk /dev/emcpowerc doesn't contain a valid partition table ← this can be ignored

fdisk -l /dev/emcpowerd

Disk /dev/emcpowerd: 26.8 GB, 26843545600 bytes ← will be used for wrapvg

64 heads, 32 sectors/track, 25600 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x9c41a8f5

Disk /dev/emcpowerd doesn't contain a valid partition table ← this can be ignored
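The size lookups above can be condensed into one device-to-size list. A sketch with a hypothetical function name; it only parses the "Disk /dev/...: ..., N bytes" header lines that fdisk -l prints.

```shell
# Read "fdisk -l" output on stdin and print "<device> <size-in-bytes>" for
# each "Disk /dev/...: ..., N bytes" header line. Other lines, including
# "doesn't contain a valid partition table", are skipped.
fdisk_disk_sizes() {
    sed -n 's/^Disk \(\/dev\/[^:]*\): .*, \([0-9][0-9]*\) bytes.*/\1 \2/p'
}

# Example (run on each server); the smallest device is the quorum LUN:
#   for dev in /dev/emcpowera /dev/emcpowerb /dev/emcpowerc /dev/emcpowerd; do
#       fdisk -l "$dev" 2>/dev/null
#   done | fdisk_disk_sizes | sort -n -k2,2
```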

6.2 Create Mountpoints (on all servers)

Perform this step on all servers.

Note: These mount points and filesystems were created for a specific project and are used here only as an example; they have nothing to do with the functioning of a Red Hat Cluster. Create whatever filesystems you need to have available to all nodes.

mkdir -p /mqdata/cipg

mkdir -p /mqdata/ewp

mkdir -p /mqdata/wrap

6.3 Prepare LVM for Cluster Locking (on all servers)

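LVM cluster locking (handled by clvmd) coordinates changes to LVM metadata across all nodes, so that one node cannot modify a shared volume group while another node is using it, which prevents metadata corruption. To enable cluster locking, run the following command on both nodes: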

lvmconf --enable-cluster

To verify it:

grep locking_type /etc/lvm/lvm.conf | grep -v "#"

locking_type = 3
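The grep above can be turned into a reusable check. A minimal sketch with a hypothetical function name; it reads only uncommented locking_type lines.

```shell
# Print the uncommented locking_type value from an lvm.conf file
# (default /etc/lvm/lvm.conf). After "lvmconf --enable-cluster" it should be 3.
lvm_locking_type() {
    local conf="${1:-/etc/lvm/lvm.conf}"
    sed -n 's/^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$conf" | tail -n 1
}

# Example:
#   [ "$(lvm_locking_type)" = 3 ] || echo "cluster locking is not enabled"
```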

6.4 Format the SAN disk (ON ONE SERVER ONLY)

NOTE: Only perform the following steps on ONE server.

First, create a single Linux LVM (type 8e) partition on the disk. The fdisk keystrokes are:

fdisk /dev/emcpowerb → n → p → 1 → Enter → Enter → t → 8e → w

The output will be as below:

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0x38f46538.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-25600, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-25600, default 25600):

Using default value 25600

Command (m for help): t

Selected partition 1

Hex code (type L to list codes): 8e

Changed system type of partition 1 to 8e (Linux LVM)

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

Verify the newly partitioned disk:

fdisk -l /dev/emcpowerb

Disk /dev/emcpowerb: 26.8 GB, 26843545600 bytes

64 heads, 32 sectors/track, 25600 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x38f46538

Device Boot Start End Blocks Id System

/dev/emcpowerb1 1 25600 26214384 8e Linux LVM
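The interactive session above can be replayed non-interactively by feeding the same keystrokes to fdisk on standard input. This is a sketch under the assumption that fdisk prompts in exactly the order shown in the transcript (other fdisk versions, or a disk that already has partitions, may prompt differently). make_lvm_partition is a hypothetical helper; it overwrites the partition table, so double-check the device name.

```shell
# Replay the fdisk keystrokes shown above on the given device:
#   n (new), p (primary), 1 (partition number), Enter (default first
#   cylinder), Enter (default last cylinder), t (change type),
#   8e (Linux LVM), w (write and exit).
# With only one partition on the disk, fdisk selects partition 1 for "t"
# without asking, as the transcript shows ("Selected partition 1").
# WARNING: this overwrites the partition table of the target device.
make_lvm_partition() {
    local dev="$1"
    [ -n "$dev" ] || { echo "usage: make_lvm_partition DEVICE" >&2; return 2; }
    printf 'n\np\n1\n\n\nt\n8e\nw\n' | fdisk "$dev"
}
```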

6.5 Create cipgvg Volume Group (ON ONE SERVER ONLY)

NOTE: Only perform the following steps on ONE server.

First, initialize the new partition as an LVM physical volume:

pvcreate /dev/emcpowerb1

Writing physical volume data to disk "/dev/emcpowerb1"

Physical volume "/dev/emcpowerb1" successfully created

Create the cipgvg volume group:

vgcreate -c y cipgvg /dev/emcpowerb1

Clustered volume group "cipgvg" successfully created

6.6 Create Logical Volumes (ON ONE SERVER ONLY)

To create a logical volume that uses the full capacity of the VG, get the “Total PE” number from the vgdisplay command:

vgdisplay cipgvg | grep "Total PE"

Total PE 6399

One logical volume is created for each filesystem:

lvcreate -l 6399 -n cipgvglv cipgvg

Logical volume "cipgvglv" created
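The Total PE lookup and the lvcreate call can be combined so the number does not have to be copied by hand. A sketch with a hypothetical function name; it assumes the "Total PE" line layout that vgdisplay prints above.

```shell
# Print the "Total PE" value from "vgdisplay VG" output read on stdin.
vg_total_pe() {
    awk '/Total PE/ { print $NF }'
}

# Example: create an LV that uses every extent in cipgvg.
#   lvcreate -l "$(vgdisplay cipgvg | vg_total_pe)" -n cipgvglv cipgvg
```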

6.7 Create other VGs/LVMs (ON ONE SERVER ONLY)

Repeat steps 6.4 to 6.6 to create the other VGs and LVs (check the Total PE value for each VG):

/dev/emcpowerc → ewpvg → ewpvglv

/dev/emcpowerd → wrapvg → wrapvglv
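Steps 6.5 and 6.6 for all three disks can be sketched as one loop. This is an illustration, not the documented method: create_cluster_volumes is a hypothetical name, it assumes the partitions from 6.4 already exist, and it uses lvcreate's `-l 100%FREE` form (allocate all free extents) instead of looking up Total PE. Run it on ONE server only.

```shell
# Device -> volume group -> logical volume mapping from sections 6.5 to 6.7.
# Assumes each disk already has the single LVM partition from section 6.4,
# so the physical volume is the "1" partition.
create_cluster_volumes() {
    local dev vg lv
    # Read the mapping on file descriptor 3 so that any prompt from the LVM
    # tools still reads its answer from the terminal (stdin).
    while read -r dev vg lv <&3; do
        pvcreate "${dev}1" &&
            vgcreate -c y "$vg" "${dev}1" &&
            lvcreate -l 100%FREE -n "$lv" "$vg" || return 1
    done 3<<'EOF'
/dev/emcpowerb cipgvg cipgvglv
/dev/emcpowerc ewpvg ewpvglv
/dev/emcpowerd wrapvg wrapvglv
EOF
}
```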

6.8 Verify all VGs/LVMs (ON ONE SERVER ONLY)

The result should be the following VGs and LVs:

pvs | grep emc

/dev/emcpowerb1 cipgvg lvm2 a-- 25.00g 0

/dev/emcpowerc1 ewpvg lvm2 a-- 25.00g 0

/dev/emcpowerd1 wrapvg lvm2 a-- 25.00g 0

vgs | egrep "cipg|ewp|wrap"

cipgvg 1 1 0 wz--nc 25.00g 0

ewpvg 1 1 0 wz--nc 25.00g 0

wrapvg 1 1 0 wz--nc 25.00g 0

lvs | egrep "cipg|ewp|wrap"

cipgvglv cipgvg -wi-ao-- 25.00g

ewpvglv ewpvg -wi-ao-- 25.00g

wrapvglv wrapvg -wi-ao-- 25.00g
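In the vgs output above, the trailing "c" in "wz--nc" (the 6th attribute character) marks a clustered VG. A sketch that flags any VG without it; the function name is hypothetical.

```shell
# Read "vgs --noheadings -o vg_name,vg_attr" output on stdin and print the
# name of every VG whose 6th attribute character is not "c" (clustered).
non_clustered_vgs() {
    awk '{ if (substr($2, 6, 1) != "c") print $1 }'
}

# Example: no output means all three data VGs are clustered.
#   vgs --noheadings -o vg_name,vg_attr cipgvg ewpvg wrapvg | non_clustered_vgs
```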

6.9 Create gfs2 Filesystems (ON ONE SERVER ONLY)

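Note: -j 2 sets the number of journals. GFS2 needs one journal for each node that mounts the filesystem, so 2 are required for this 2-node cluster; if your cluster has more nodes, change this number to suit. The part of the -t lock table name before the colon must match the cluster name (cipglu1-clus1).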

mkfs.gfs2 -t cipglu1-clus1:cipgvglv -j 2 -p lock_dlm /dev/cipgvg/cipgvglv

mkfs.gfs2 -t cipglu1-clus1:ewpvglv -j 2 -p lock_dlm /dev/ewpvg/ewpvglv

mkfs.gfs2 -t cipglu1-clus1:wrapvglv -j 2 -p lock_dlm /dev/wrapvg/wrapvglv

The output for the first filesystem is shown below; the output for the others is similar:

This will destroy any data on /dev/cipgvg/cipgvglv.

It appears to contain: symbolic link to `../dm-11'

Are you sure you want to proceed? [y/n] y

Device: /dev/cipgvg/cipgvglv

Blocksize: 4096

Device Size 25.00 GB (6552576 blocks)

Filesystem Size: 25.00 GB (6552576 blocks)

Journals: 2

Resource Groups: 100

Locking Protocol: "lock_dlm"

Lock Table: "cipglu1-clus1:cipgvglv"

UUID: 8cfcb279-725f-6ace-4793-12f123c9e69e

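The three mkfs.gfs2 commands can be generated from the VG names, with a sanity check on the lock table name first. A sketch with hypothetical function names; the 1 to 16 character limit on the filesystem-name part is taken from the RHEL 6 GFS2 documentation, and the cluster name must match the one created in LUCI.

```shell
CLUSTER_NAME="cipglu1-clus1"   # must match the cluster name created in section 5.2
JOURNALS=2                      # one journal per node that mounts the filesystem

# Check a lock table name of the form "clustername:fsname": non-empty
# cluster name, exactly one colon, and an fsname of 1 to 16 characters.
valid_lock_table() {
    local table="$1" cluster fs
    case "$table" in
        *:*:*|:*|*:) return 1 ;;
        *:*) ;;
        *) return 1 ;;
    esac
    cluster="${table%%:*}"
    fs="${table#*:}"
    [ -n "$cluster" ] && [ "${#fs}" -ge 1 ] && [ "${#fs}" -le 16 ]
}

# Create the three GFS2 filesystems (ONE server only). mkfs.gfs2 still asks
# for confirmation before overwriting each device.
make_gfs2_filesystems() {
    local vg lv table
    for vg in cipgvg ewpvg wrapvg; do
        lv="${vg}lv"
        table="${CLUSTER_NAME}:${lv}"
        valid_lock_table "$table" || { echo "invalid lock table: $table" >&2; return 1; }
        mkfs.gfs2 -t "$table" -j "$JOURNALS" -p lock_dlm "/dev/${vg}/${lv}" || return 1
    done
}
```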

6.10 Add GFS Workaround to TSM Configuration (ALL SERVERS)

Modify the /opt/tivoli/tsm/client/ba/bin/dsm.sys file and add the following lines at the end:

### GFS2 backup:

VIRTUALMOUNTPOINT /mqdata/cipg

VIRTUALMOUNTPOINT /mqdata/wrap

VIRTUALMOUNTPOINT /mqdata/ewp
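Appending by hand risks duplicate lines if the step is repeated. A sketch of an idempotent version with a hypothetical function name; like the text, it appends to the end of the file, so if dsm.sys contains more than one SERVERNAME stanza, check that the lines land in the intended stanza.

```shell
# Append the GFS2 VIRTUALMOUNTPOINT entries to the end of dsm.sys, skipping
# any that are already present, so the step can be re-run safely.
add_tsm_virtual_mountpoints() {
    local dsm="${1:-/opt/tivoli/tsm/client/ba/bin/dsm.sys}" mp
    grep -q '^### GFS2 backup:' "$dsm" || echo '### GFS2 backup:' >> "$dsm"
    for mp in /mqdata/cipg /mqdata/wrap /mqdata/ewp; do
        grep -Eq "^[[:space:]]*VIRTUALMOUNTPOINT[[:space:]]+${mp}[[:space:]]*\$" "$dsm" ||
            echo "VIRTUALMOUNTPOINT ${mp}" >> "$dsm"
    done
}
```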

6.11 Create Quorum Disk (ON ONE SERVER ONLY)

NOTE: Make sure that you create the quorum disk on the correct emcpowerX device (the 20 MB LUN identified in section 6.1)!

mkqdisk -c /dev/emcpowera -l CIPGmqu1-quorum

mkqdisk v3.0.12.1

Writing new quorum disk label 'CIPGmqu1-quorum' to /dev/emcpowera.

WARNING: About to destroy all data on /dev/emcpowera; proceed [N/y] ? y

Initializing status block for node 1...

Initializing status block for node 2...

Initializing status block for node 3...

Initializing status block for node 4...

Initializing status block for node 5...

Initializing status block for node 6...

Initializing status block for node 7...

Initializing status block for node 8...

Initializing status block for node 9...

Initializing status block for node 10...

Initializing status block for node 11...

Initializing status block for node 12...

Initializing status block for node 13...

Initializing status block for node 14...

Initializing status block for node 15...

Initializing status block for node 16...
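Because mkqdisk destroys whatever is on the target device, a size check before running it (for example, when rebuilding) guards against picking the wrong emcpowerX device. A sketch with a hypothetical function name; the expected size is the 20971520 bytes reported by fdisk in section 6.1.

```shell
# Expected size of the quorum LUN, taken from the fdisk output in section 6.1.
QUORUM_BYTES=20971520

# Succeed only if the device is exactly the size of the quorum LUN.
is_quorum_lun() {
    local size
    size=$(blockdev --getsize64 "$1" 2>/dev/null) || return 1
    [ "$size" = "$QUORUM_BYTES" ]
}

# Example:
#   is_quorum_lun /dev/emcpowera && mkqdisk -c /dev/emcpowera -l CIPGmqu1-quorum
```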

6.12 Check Filesystem Status (on both servers)

The new PVs, VGs and LVs may need to be rescanned on the second cluster node; if the scan does not find them, reboot that node. To scan the SAN disks on the second server, run the following commands:

pvscan

PV /dev/emcpowerd1 VG wrapvg lvm2 [25.00 GiB / 0 free]

PV /dev/emcpowerc1 VG ewpvg lvm2 [25.00 GiB / 0 free]

PV /dev/emcpowerb1 VG cipgvg lvm2 [25.00 GiB / 0 free]

PV /dev/sda3 VG systemvg lvm2 [29.97 GiB / 0 free]

PV /dev/sda2 VG rootvg lvm2 [29.97 GiB / 15.97 GiB free]

vgscan

Reading all physical volumes. This may take a while...

Found volume group "wrapvg" using metadata type lvm2

Found volume group "ewpvg" using metadata type lvm2

Found volume group "cipgvg" using metadata type lvm2

Found volume group "systemvg" using metadata type lvm2

Found volume group "rootvg" using metadata type lvm2

lvscan

ACTIVE '/dev/wrapvg/wrapvglv' [25.00 GiB] inherit

ACTIVE '/dev/ewpvg/ewpvglv' [25.00 GiB] inherit

ACTIVE '/dev/cipgvg/cipgvglv' [25.00 GiB] inherit

ACTIVE '/dev/systemvg/systemlv' [29.97 GiB] inherit

ACTIVE '/dev/rootvg/rootlv' [1.00 GiB] inherit

ACTIVE '/dev/rootvg/tmplv' [1.00 GiB] inherit

ACTIVE '/dev/rootvg/usrlv' [2.50 GiB] inherit

ACTIVE '/dev/rootvg/homelv' [1.00 GiB] inherit

ACTIVE '/dev/rootvg/usrlocallv' [512.00 MiB] inherit

ACTIVE '/dev/rootvg/optlv' [1.00 GiB] inherit

ACTIVE '/dev/rootvg/varlv' [2.00 GiB] inherit

ACTIVE '/dev/rootvg/bmcappslv' [2.00 GiB] inherit

ACTIVE '/dev/rootvg/crashlv' [2.00 GiB] inherit

ACTIVE '/dev/rootvg/swaplv' [1.00 GiB] inherit
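On the second node, the lvscan output can be checked for the three cluster LVs automatically. A sketch with a hypothetical function name; no output means all three are ACTIVE.

```shell
# Read "lvscan" output on stdin and print each of the three cluster LVs
# that is not reported as ACTIVE (missing or inactive).
inactive_cluster_lvs() {
    local scan lv
    scan=$(cat)
    for lv in /dev/cipgvg/cipgvglv /dev/ewpvg/ewpvglv /dev/wrapvg/wrapvglv; do
        printf '%s\n' "$scan" | grep -q "^[[:space:]]*ACTIVE[[:space:]]*'${lv}'" || echo "$lv"
    done
}

# Example:
#   lvscan | inactive_cluster_lvs
```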

To verify all PVs, VGs and LVs, run the following commands on both nodes:

pvs

PV VG Fmt Attr PSize PFree

/dev/emcpowerb1 cipgvg lvm2 a-- 25.00g 0

/dev/emcpowerc1 ewpvg lvm2 a-- 25.00g 0

/dev/emcpowerd1 wrapvg lvm2 a-- 25.00g 0

/dev/sda2 rootvg lvm2 a-- 29.97g 15.97g

/dev/sda3 systemvg lvm2 a-- 29.97g 0

vgs

VG #PV #LV #SN Attr VSize VFree

cipgvg 1 1 0 wz--nc 25.00g 0

ewpvg 1 1 0 wz--nc 25.00g 0

wrapvg 1 1 0 wz--nc 25.00g 0

rootvg 1 10 0 wz--n- 29.97g 15.97g

systemvg 1 1 0 wz--n- 29.97g 0

lvs

LV VG Attr LSize

cipgvglv cipgvg -wi-ao-- 25.00g

ewpvglv ewpvg -wi-ao-- 25.00g

wrapvglv wrapvg -wi-ao-- 25.00g

bmcappslv rootvg -wi-ao-- 2.00g

crashlv rootvg -wi-ao-- 2.00g

homelv rootvg -wi-ao-- 1.00g

optlv rootvg -wi-ao-- 1.00g

rootlv rootvg -wi-ao-- 1.00g

swaplv rootvg -wi-ao-- 1.00g

tmplv rootvg -wi-ao-- 1.00g

usrlocallv rootvg -wi-ao-- 512.00m

usrlv rootvg -wi-ao-- 2.50g

varlv rootvg -wi-ao-- 2.00g

systemlv systemvg -wi-ao-- 29.97g

6.13 Check the Quorum Disk

We can run the following command on both nodes to check the quorum disk.

mkqdisk -L

mkqdisk v3.0.12.1

/dev/block/120:0:

/dev/emcpowera:

Magic: eb7a62c2

Label: CIPGmqu1-quorum

Created: Wed Oct 24 15:25:19 2012

Host: cipgbcclu1mqs01

Kernel Sector Size: 512

Recorded Sector Size: 512

/dev/block/8:64:

/dev/disk/by-path/pci-0000:08:00.0-fc-0x5006016446e04bab-lun-3:

/dev/sde:

Magic: eb7a62c2

Label: CIPGmqu1-quorum

Created: Wed Oct 24 15:25:19 2012

Host: cipgbcclu1mqs01

Kernel Sector Size: 512

Recorded Sector Size: 512

/dev/block/8:128:

/dev/disk/by-path/pci-0000:08:00.0-fc-0x5006016e46e04bab-lun-3:

/dev/sdi:

Magic: eb7a62c2

Label: CIPGmqu1-quorum

Created: Wed Oct 24 15:25:19 2012

Host: cipgbcclu1mqs01

Kernel Sector Size: 512

Recorded Sector Size: 512

/dev/block/8:192:

/dev/disk/by-path/pci-0000:08:00.1-fc-0x5006016646e04bab-lun-3:

/dev/sdm:

Magic: eb7a62c2

Label: CIPGmqu1-quorum

Created: Wed Oct 24 15:25:19 2012

Host: cipgbcclu1mqs01

Kernel Sector Size: 512

Recorded Sector Size: 512

/dev/block/65:0:

/dev/disk/by-id/scsi-3600601602a802c005cb50bd5321ae211:

/dev/disk/by-id/wwn-0x600601602a802c005cb50bd5321ae211:

/dev/disk/by-path/pci-0000:08:00.1-fc-0x5006016c46e04bab-lun-3:

/dev/sdq:

Magic: eb7a62c2

Label: CIPGmqu1-quorum

Created: Wed Oct 24 15:25:19 2012

Host: cipgbcclu1mqs01

Kernel Sector Size: 512

Recorded Sector Size: 512
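In the sample above, the label appears on 5 entries: /dev/emcpowera plus its 4 native paths (sde, sdi, sdm, sdq). A sketch that counts them so both nodes can be compared quickly; the function name is hypothetical.

```shell
# Count the device entries in "mkqdisk -L" output (stdin) that carry the
# given label.
count_qdisk_label() {
    grep -c "^[[:space:]]*Label:[[:space:]]*$1[[:space:]]*\$"
}

# Example (expect 5 on these servers):
#   mkqdisk -L | count_qdisk_label CIPGmqu1-quorum
```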

6.14 Configure hosts Files (on all servers)

On all servers, add the following entries to /etc/hosts so that each server can resolve the other node, the VIPs, the iLOs and the heartbeat addresses:

# Data IPs:

10.192.45.80 cipgbcclu1mqs01 cipgbcclu1mqs01.tsa.RANDOM.com

10.192.45.81 cipgbcclu1mqs02 cipgbcclu1mqs02.tsa.RANDOM.com

# VIPs:

10.192.45.82 ewpcbcclu1mqm01 ewpcbcclu1mqm01.tsa.RANDOM.com

10.192.45.83 wrapbcclu1mqm01 wrapbcclu1mqm01.tsa.RANDOM.com

10.192.45.84 cipgbcclu1mqm01 cipgbcclu1mqm01.tsa.RANDOM.com

10.192.45.85 cipgbcclu1nfs01 cipgbcclu1nfs01.tsa.RANDOM.com

# iLO IPs:

10.194.2.128 node01-iLO

10.194.4.252 node02-iLO

# Heartbeat IPs:

172.16.81.60 cipglu1mqs01-hb

172.16.81.61 cipglu1mqs02-hb
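To avoid duplicate lines when the step is re-run, each entry can be added only if its IP is not already listed. A sketch with a hypothetical function name; afterwards, `getent hosts cipglu1mqs02-hb` on each node shows whether a name resolves.

```shell
# Append "IP NAME..." to a hosts file unless that IP already starts a line.
add_host_entry() {
    local hosts_file="$1" ip="$2" ip_re
    shift 2
    # Escape the dots so the IP is matched literally, then require whitespace
    # after it so that 10.192.45.8 does not match 10.192.45.85.
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
    grep -Eq "^[[:space:]]*${ip_re}[[:space:]]" "$hosts_file" ||
        printf '%s %s\n' "$ip" "$*" >> "$hosts_file"
}

# Example:
#   add_host_entry /etc/hosts 10.192.45.85 cipgbcclu1nfs01 cipgbcclu1nfs01.tsa.RANDOM.com
```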

7. Configure the Cluster

Use the following URL to access the LUCI web interface on the first server, and log in with the root credentials:

https://10.192.45.80:8084

7.1 Add the quorum disk to cluster

Click the “Configure → QDisk” tab in the cluster's LUCI web interface and enter the following information for the quorum disk configuration:

By Device Label: CIPGmqu1-quorum

Click the “Apply” button; the following screen is displayed:

7.2 Create/configure Fence Devices for both nodes

We will need to create one fence device for each node.

7.2.1 Create fence ids on both servers from HP iLO

Create the fence IDs on both servers from the HP iLO.

Log in to the first server's iLO web page at https://iLO_ip, go to “Administration → User Administration → New”, enter the following information, and then click the “Add user” button:

User Information:

User Name: fence1

Login Name: fence1

Password: xxxxxxxx

Password Confirm: xxxxxxxx

User permissions:

Select all of the following privileges:

Administer User Accounts

Remote Console Access

Virtual Power and Reset

Virtual Media

Configure iLO Settings

Repeat the above steps to create the “fence2” iLO ID on the second node's iLO web page.
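Before configuring the fence devices in LUCI, the new iLO accounts can be tested from a cluster node with ipmitool (from the OpenIPMI tools installed in section 4.2). A sketch with a hypothetical function name; it assumes IPMI over LAN is enabled on the iLO and that the iLO accepts IPMI v2.0 (`-I lanplus`); try `-I lan` if it does not. The password is passed through the IPMI_PASSWORD environment variable (`-E`) so it does not appear on the command line.

```shell
# Ask a node's iLO for its power status over IPMI, using the fence account
# created above. A reply such as "Chassis Power is on" indicates that the
# account and the network path to the iLO work.
fence_status() {
    local ilo_ip="$1" user="$2" pass="$3"
    IPMI_PASSWORD="$pass" ipmitool -I lanplus -H "$ilo_ip" -U "$user" -E chassis power status
}

# Example:
#   fence_status 10.194.2.128 fence1 'xxxxxxxx'
#   fence_status 10.194.4.252 fence2 'xxxxxxxx'
```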

7.2.2 Create Fence Devices for first node

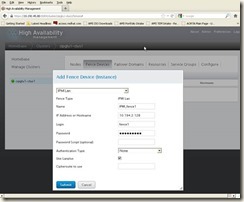

Click the “Fence Devices” tab in the cluster's LUCI web interface and then click the “Add” button. In the new “Add Fence Device (instance)” window, choose “Select a Fence Device → IPMI Lan”, and then enter the following information for the first node's fence device:

Name: IPMI_fence1

IP Address or Hostname: 10.194.2.128 (iLO IP of the first node)

Login: fence1 (iLO id created from section 7.2.1)

Password: the password of the fence1 ID

Click the “Submit” button; the following screen is displayed:

7.2.3 Create Fence Devices for second node

Repeat the procedure in 7.2.2 to create the second node's fence device:

Name: IPMI_fence2

IP Address or Hostname: 10.194.4.252 (iLO IP of the second node)

Login: fence2 (iLO id created from section 7.2.1)

Password: the password of the fence2 ID

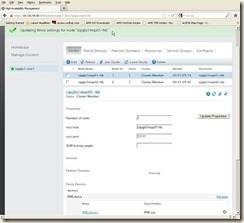

Once this is done, the following screen is displayed:

7.2.4 Add Fence Device to the first node

Click the “Nodes” tab in the cluster's LUCI web interface, and then click the first node name, “cipglu1mqs01-hb”:

Click the “Add Fence Method” button and enter “IPMI_fence” as the Method Name:

Then click the “Submit” button. In the new “Add Fence Device (Instance)” window, select “IPMI_fence1 (IPMI Lan)”:

Then click the “Submit” button; the following screen is displayed:

7.2.5 Add Fence Device to the second node

Repeat the procedure in 7.2.4 to add “IPMI_fence2” to the second node, “cipglu1mqs02-hb”. Once this is done, the following screen is displayed:

7.3 Create resources for cluster

Because this is an NFS v4 cluster, make sure that the latest NFS packages are installed. The following resources will be created for this cluster:

- IP resource;

- GFS2 resources;

- NFS Export resources;

- NFS Client resources;

7.3.1 Update the NFS package

Verify the installed NFS package version, because older versions have some NFS v4 bugs:

rpm -qa | grep -i nfs-utils

nfs-utils-1.2.3-26.el6.x86_64

nfs-utils-lib-1.1.5-4.el6.x86_64

The installed version (1.2.3-26) is too old, so upgrade it to nfs-utils-1.2.3-34.el6.x86_64.rpm or later. Once you have obtained this package from Red Hat, run the following command to install or update it:

rpm -Uvh nfs-utils-1.2.3-34.el6.x86_64.rpm
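The version check can be scripted so it is repeatable on both nodes. A sketch with a hypothetical function name; it relies on GNU `sort -V` version ordering, which is close to, but not identical to, rpm's own version comparison, so treat it as a convenience check.

```shell
# Succeed if version-release string $1 is the same as or newer than $2.
# Uses GNU sort -V ordering, which approximates rpm's comparison.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example:
#   installed=$(rpm -q --qf '%{VERSION}-%{RELEASE}\n' nfs-utils)
#   version_ge "$installed" 1.2.3-34.el6 || echo "nfs-utils $installed needs upgrading"
```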

7.3.2 Create IP resource for cluster

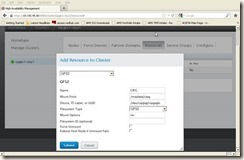

Click the “Resources” tab in the cluster's LUCI web interface and then click the “Add” button. In “Add Resource to Cluster → Select a Resource Type”, select “IP Address” and enter the following information:

IP Address: 10.192.45.85 (VIP for the NFS service)

Netmask Bits: 23 (the prefix length for netmask 255.255.254.0)

Click the “Submit” button; the following screen is displayed:
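The LUCI field is labeled “Netmask Bits”, which suggests a prefix length rather than a dotted netmask; 255.255.254.0 corresponds to 23. A sketch for converting other netmasks, with a hypothetical function name; it counts set bits and assumes the netmask is valid and contiguous.

```shell
# Convert a dotted-quad netmask to a prefix length by counting set bits.
# Assumes a valid, contiguous netmask; contiguity is not checked.
netmask_to_prefix() {
    local mask="$1" bits=0 octet old_ifs
    old_ifs=$IFS
    IFS=.
    set -- $mask
    IFS=$old_ifs
    for octet in "$@"; do
        while [ "$octet" -gt 0 ]; do
            bits=$((bits + (octet & 1)))
            octet=$((octet >> 1))
        done
    done
    echo "$bits"
}
```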

7.3.3 Create GFS2 resources for cluster

We need to create the CIPG, EWP and WRAP GFS2 resources for this cluster.

7.3.3.1 Create CIPG GFS2 resources for cluster

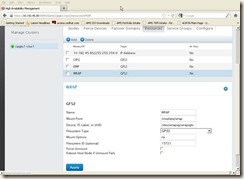

Click the “Resources” tab in the cluster's LUCI web interface and then click the “Add” button. In “Add Resource to Cluster → Select a Resource Type”, select “GFS2” and enter the following information:

Name: CIPG

Mount point: /mqdata/cipg

Device, FS Label, or UUID: /dev/cipgvg/cipgvglv

Filesystem Type: GFS2

Mount Options: rw

Click the “Submit” button to create the first (CIPG) GFS2 resource; the following screen is displayed:

7.3.3.2 Create EWP GFS2 resources for cluster

Repeat the procedure in 7.3.3.1 to create the GFS2 resource for EWP:

EWP GFS2 resource:

Name: EWP

Mount point: /mqdata/ewp

Device, FS Label, or UUID: /dev/ewpvg/ewpvglv

Filesystem Type: GFS2

Mount Options: rw

7.3.3.3 Create WRAP GFS2 resources for cluster

Repeat the procedure in 7.3.3.1 to create the GFS2 resource for WRAP:

WRAP GFS2 resource:

Name: WRAP

Mount Point: /mqdata/wrap

Device, FS label, or UUID: /dev/wrapvg/wrapvglv

Filesystem Type: GFS2

Mount Options: rw

7.3.3.4 Verify all GFS2 resources for cluster

Finally, there are three GFS2 resources, for CIPG, EWP and WRAP:

7.3.4 Create NFS Export resources for cluster

We need to create the CIPG, EWP and WRAP NFS Export resources for this cluster.

7.3.4.1 Create CIPG NFS Export resource for cluster

Click the “Resources” tab in the cluster's LUCI web interface and then click the “Add” button. In “Add Resource to Cluster → Select a Resource Type”, select “NFS v3 Export” and enter the following information:

Name: CIPG-export

Click the “Submit” button to create the first (CIPG) NFS Export resource; the following screen is displayed:

7.3.4.2 Create EWP NFS Export resource for cluster

Repeat the procedure in 7.3.4.1 to create the NFS Export resource for EWP:

EWP NFS Export resource:

Name: EWP-export

7.3.4.3 Create WRAP NFS Export resource for cluster

Repeat the procedure in 7.3.4.1 to create the NFS Export resource for WRAP:

WRAP NFS Export resource:

Name: WRAP-export

7.3.4.4 Verify all NFS Export resources

Finally, there are three NFS Export resources:

7.3.5 Create NFS Client resources for cluster

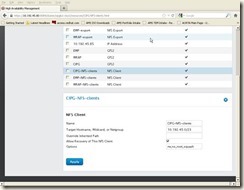

We need to create the CIPG, EWP and WRAP NFS Client resources for this cluster.

7.3.5.1 Create CIPG NFS Client resource for cluster

Click the “Resources” tab in the cluster's LUCI web interface and then click the “Add” button. In “Add Resource to Cluster → Select a Resource Type”, select “NFS Client”, enter the following information, and click the “Submit” button to create the first (CIPG) NFS Client resource:

Name: CIPG-NFS-clients

Target Hostname, Wildcard, or Netgroup: 10.192.45.0/23

Options: rw,no_root_squash
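A /23 prefix spans two /24 networks, so the client target 10.192.45.0/23 covers 10.192.44.0 through 10.192.45.255 (the /23 network that contains 10.192.45.0 starts at 10.192.44.0). A sketch for checking which addresses a CIDR target covers, with a hypothetical function name; it relies on the shell's 64-bit arithmetic.

```shell
# Print the first (network) and last (broadcast) IPv4 addresses of a CIDR
# block, e.g. to see which client addresses an NFS Client target covers.
cidr_range() {
    local ip="${1%/*}" prefix="${1#*/}" old_ifs addr mask net last
    old_ifs=$IFS
    IFS=.
    set -- $ip
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    net=$(( addr & mask ))
    last=$(( net | (~mask & 0xFFFFFFFF) ))
    printf '%d.%d.%d.%d %d.%d.%d.%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) $(( (net >> 8) & 255 )) $(( net & 255 )) \
        $(( (last >> 24) & 255 )) $(( (last >> 16) & 255 )) $(( (last >> 8) & 255 )) $(( last & 255 ))
}

# Example:
#   cidr_range 10.192.45.0/23    # prints "10.192.44.0 10.192.45.255"
```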

7.3.5.2 Create EWP NFS Client resource for cluster

Repeat the procedure in 7.3.5.1 to create the NFS Client resource for EWP:

Name: EWP-NFS-clients

Target Hostname, Wildcard, or Netgroup: 10.192.45.0/23

Options: rw,no_root_squash

7.3.5.3 Create WRAP NFS Client resource for cluster

Repeat the procedure in 7.3.5.1 to create the NFS Client resource for WRAP:

Name: WRAP-NFS-clients

Target Hostname, Wildcard, or Netgroup: 10.192.45.0/23

Options: rw,no_root_squash

7.3.5.4 Verify all NFS Client resources for cluster

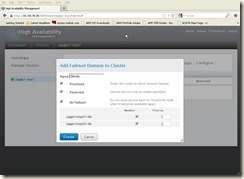

7.4 Create failover domain for cluster

Click the “Failover Domains” tab in the cluster's LUCI web interface, then click the “Add” button and enter the following information:

Name: DM-nfs

Prioritized: checked;

Restricted: checked;

No Failback: checked;

Members:

| Member | Member checkbox | Priority |
|---|---|---|
| cipglu1mqs01-hb | checked | 1 |
| cipglu1mqs02-hb | checked | 2 |

Click the “Create” button; the following screen is displayed.

7.5 Create/configure NFS service for cluster

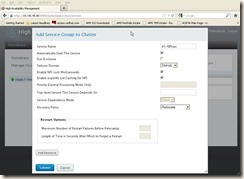

We will create the NFS service group and then add all of the resources to it.

7.5.1 Create NFS service group for cluster

Click the “Service Groups” tab in the cluster LUCI web interface, click the “Add” button, and enter the following information:

Service Name: U1-NFSsvc

Failover Domain: DM-nfs

Recovery Policy: Relocate

Click the “Submit” button; the following screen is displayed:

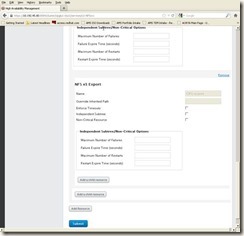
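A ccs sketch of the same service group definition is shown below; domain and recovery are the service attributes that correspond to the “Failover Domain” and “Recovery Policy” fields. CCS_HOST and the run/DRY_RUN wrapper are hypothetical, and by default the command is only printed.

```shell
#!/bin/bash
# Sketch: ccs equivalent of the U1-NFSsvc service group created in LUCI.
# Assumptions: ccs is installed; CCS_HOST (hypothetical) names a node that
# runs ricci; DRY_RUN=1 (the default) only prints the command.
CCS_HOST="${CCS_HOST:-cipglu1mqs01-hb}"

# Print the command when DRY_RUN=1 (the default); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

add_nfs_service() {
  # Failover Domain -> domain=, Recovery Policy "Relocate" -> recovery=relocate
  run ccs -h "$CCS_HOST" --addservice U1-NFSsvc domain=DM-nfs recovery=relocate
}
```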

7.5.2 Add all resources to NFS service group

All the resources now need to be added to the NFS service group.

7.5.2.1 Add IP resource to NFS service group

On the U1-NFSsvc service group page, click the “Add Resource” button at the bottom left. In the “Add Resource to Service → Select a Resource Type” window, select the pre-created IP resource “10.192.45.85”.

7.5.2.2 Add CIPG GFS2 resource to NFS service group

On the U1-NFSsvc service group page, click the “Add Resource” button at the bottom left. In the “Add Resource to Service → Select a Resource Type” window, select the pre-created CIPG GFS2 resource “CIPG”.

7.5.2.3 Add CIPG NFS Export resource to NFS service group

Click the “Add a child resource” button at the bottom of the CIPG GFS2 resource (see the screen in 7.5.2.2). In the “Add Resource to Service → Select a Resource Type” window, select the pre-created CIPG NFS Export resource “CIPG-export”.

7.5.2.4 Add CIPG NFS Client resource to NFS service group

Click the “Add a child resource” button at the bottom of the CIPG NFS Export resource (see the screen in 7.5.2.3). In the “Add Resource to Service → Select a Resource Type” window, select the pre-created CIPG NFS Client resource “CIPG-NFS-clients”.
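The resource tree built in 7.5.2.1 to 7.5.2.4, and repeated for EWP and WRAP, could be sketched with ccs --addsubservice: the IP address at the top level, then for each application a GFS2 (clusterfs) resource with its NFS Export as a child and its NFS Client as a grandchild. Several assumptions apply: the EWP and WRAP GFS2 and export resources are taken to follow the CIPG naming pattern (EWP, EWP-export, WRAP, WRAP-export), the clusterfs[N] index is assumed to be zero-based, and the parent:child path syntax should be checked against ccs(8) on your release. CCS_HOST and the run/DRY_RUN wrapper are hypothetical.

```shell
#!/bin/bash
# Sketch: ccs equivalent of the U1-NFSsvc resource tree.
# Assumptions: ccs is installed; CCS_HOST is hypothetical; EWP/WRAP resource
# names follow the CIPG pattern; clusterfs[N] indexing is zero-based.
# DRY_RUN=1 (the default) only prints the commands.
CCS_HOST="${CCS_HOST:-cipglu1mqs01-hb}"

# Print the command when DRY_RUN=1 (the default); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

add_nfs_tree() {
  local svc="U1-NFSsvc" i=0 app
  # Top-level reference to the pre-created IP resource
  run ccs -h "$CCS_HOST" --addsubservice "$svc" ip ref=10.192.45.85
  for app in CIPG EWP WRAP; do
    # GFS2 file system resource at the top level of the service
    run ccs -h "$CCS_HOST" --addsubservice "$svc" clusterfs ref="$app"
    # NFS Export as a child of this clusterfs ...
    run ccs -h "$CCS_HOST" --addsubservice "$svc" "clusterfs[$i]:nfsexport" ref="${app}-export"
    # ... and the NFS Client as a child of that export
    run ccs -h "$CCS_HOST" --addsubservice "$svc" "clusterfs[$i]:nfsexport:nfsclient" ref="${app}-NFS-clients"
    i=$((i + 1))
  done
}
```

Printing the commands first (the default) makes it easy to compare them with the tree LUCI shows before anything is changed.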

7.5.2.5 Add EWP GFS2/NFS Export/NFS Clients resources to NFS service group

Repeat steps 7.5.2.2 to 7.5.2.4 to add the EWP GFS2, NFS Export and NFS Client resources to the NFS service group.

7.5.2.6 Add WRAP GFS2/NFS Export/NFS Clients resources to NFS service group

Repeat steps 7.5.2.2 to 7.5.2.4 to add the WRAP GFS2, NFS Export and NFS Client resources to the NFS service group.

7.6 Restart the cluster

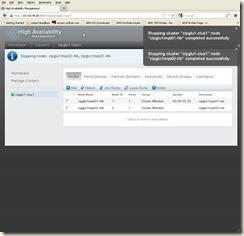

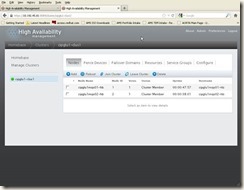

To restart the cluster from the LUCI web interface:

Click the “Nodes” tab in the cluster LUCI web interface, select both nodes, and click “Leave Cluster”. Once the cluster has stopped successfully, the following screen is displayed:

Then click “Join Cluster”. Once the cluster has started, the following screen is displayed:

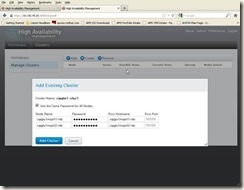
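A rough command-line counterpart to “Leave Cluster” and “Join Cluster” for all nodes is ccs with --stopall and --startall. This is a sketch only: CCS_HOST and the run/DRY_RUN wrapper are hypothetical, and ccs(8) on your release should be checked for whether these options also change the services' boot-time enablement.

```shell
#!/bin/bash
# Sketch: stop and then start cluster services on all nodes through ccs.
# Assumptions: ccs is installed; CCS_HOST (hypothetical) names a node that
# runs ricci; DRY_RUN=1 (the default) only prints the commands.
CCS_HOST="${CCS_HOST:-cipglu1mqs01-hb}"

# Print the command when DRY_RUN=1 (the default); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

restart_cluster() {
  run ccs -h "$CCS_HOST" --stopall || return 1   # like "Leave Cluster" on both nodes
  run ccs -h "$CCS_HOST" --startall              # like "Join Cluster" on both nodes
}
```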

7.7 Configure LUCI on second server

Use the following URL to access the LUCI web interface on the second server:

https://10.192.45.81:8084

Log in with the root credentials for the second server, click the “Add” button, and enter the following information to add the existing cluster:

Click the “Add Cluster” button. The resulting screen confirms that the cluster has been added to LUCI on the second server.

8. Test the cluster failover

Perform each of the following tests on both servers, but not on both at the same time. Run a step on one node and verify the cluster; only once the cluster has been verified as healthy, run the same step on the other node. Then move on to the next test.

We can always use “clustat” or the LUCI web interface to verify the cluster state and active node.

Before starting each step, all cluster “Members” should be Online and the cluster state should be active (quorate).
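This pre-test check can be scripted against clustat output. cluster_healthy is a hypothetical helper that assumes the layout shown in 8.1: a “Member Status:” line, and member lines (nodes and the quorum disk) with a numeric ID in the second column followed by their status.

```shell
#!/bin/bash
# Hypothetical pre-test check: succeeds only if clustat output on stdin shows
# "Member Status: Quorate" and every member line (nodes and quorum disk) is
# Online. Usage: clustat | cluster_healthy && echo "OK to run the next test"
cluster_healthy() {
  awk '
    /^Member Status:/ { quorate = ($3 == "Quorate") }
    # Member lines carry a numeric ID in field 2, e.g.
    #   cipglu1mqs02-hb   2 Online, rgmanager
    $2 ~ /^[0-9]+$/ { members++; if ($3 !~ /^Online/) down++ }
    END { exit !(quorate && members > 0 && down == 0) }
  '
}
```

Reading from stdin keeps the check usable on saved clustat output as well as on a live pipe.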

8.1 “fence_node” test

Determine which node is the active node:

clustat

    Cluster Status for cipglu1-clus1 @ Wed Nov 14 15:37:12 2012
    Member Status: Quorate

     Member Name                 ID   Status
     ------ ----                 ---- ------
     cipglu1mqs01-hb                 1 Online, Local, rgmanager
     cipglu1mqs02-hb                 2 Online, rgmanager
     /dev/block/120:0                0 Online, Quorum Disk

     Service Name                Owner (Last)                State
     ------- ----                ----- ------                -----
     service:U1-NFSsvc           cipglu1mqs02-hb             started

In this case, the active node is the second server.
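Picking the active node out of the clustat output can be scripted. active_node is a hypothetical helper that assumes the service line layout shown above (service name, owner, state); note that clustat shows the last owner in parentheses when a service is not running.

```shell
#!/bin/bash
# Hypothetical helper: print the owner of a clustered service from clustat
# output on stdin; exits non-zero if the service line is not found.
# Usage: clustat | active_node U1-NFSsvc
active_node() {
  local service="${1:-U1-NFSsvc}"
  # Service lines look like: service:U1-NFSsvc  cipglu1mqs02-hb  started
  awk -v svc="service:$service" '$1 == svc { print $2; found = 1 } END { exit !found }'
}
```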

To monitor the cluster as the failover occurs, open a separate window on each node and follow the system log in real time:

tail -f /var/log/messages
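On a busy node it can help to filter that log down to the cluster daemons. The sketch below is one way to do it; the daemon list (corosync, fenced, qdiskd, rgmanager, dlm_controld, gfs_controld, plus GFS2 kernel messages) is our assumption for a RHEL 6 cman-based cluster and can be extended as needed.

```shell
#!/bin/bash
# Sketch: keep only log lines from the RHEL 6 cluster stack.
# The daemon list is an assumption; extend it as needed.
# Usage: tail -f /var/log/messages | cluster_log_filter
cluster_log_filter() {
  grep -E '(corosync|fenced|qdiskd|rgmanager|dlm_controld|gfs_controld)\[|GFS2:'
}
```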

To fence node 2 (the current active node), run the following command on node 1:

fence_node cipglu1mqs02-hb

| fence cipglu1mqs02-hb success |

The second node is fenced (rebooted). On the first node, run the following command and confirm that U1-NFSsvc has failed over to the first node:

clustat

    Cluster Status for cipglu1-clus1 @ Wed Nov 14 16:30:23 2012
    Member Status: Quorate

     Member Name                 ID   Status
     ------ ----                 ---- ------
     cipglu1mqs01-hb                 1 Online, Local, rgmanager
     cipglu1mqs02-hb                 2 Offline
     /dev/block/120:0                0 Online, Quorum Disk

     Service Name                Owner (Last)                State
     ------- ----                ----- ------                -----
     service:U1-NFSsvc           cipglu1mqs01-hb             started
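Instead of re-running clustat by hand, the failover can be waited for in a loop. wait_for_owner is a hypothetical helper: it polls clustat until the service is reported as started on the expected node, giving up after a number of polls. CLUSTAT_CMD (also hypothetical) lets another command, such as a stub that prints saved output, stand in for clustat.

```shell
#!/bin/bash
# Hypothetical helper: poll clustat until SERVICE is "started" on NODE.
# Usage: wait_for_owner SERVICE NODE [TRIES] [INTERVAL_SECONDS]
wait_for_owner() {
  local service="$1" node="$2" tries="${3:-30}" interval="${4:-10}"
  local cmd="${CLUSTAT_CMD:-clustat}" i=1 state
  while [ "$i" -le "$tries" ]; do
    # Service lines look like: service:U1-NFSsvc  cipglu1mqs01-hb  started
    # $cmd is left unquoted on purpose so it may carry options.
    state=$($cmd | awk -v s="service:$service" '$1 == s { print $2, $NF }')
    if [ "$state" = "$node started" ]; then
      echo "$service is started on $node"
      return 0
    fi
    # Only sleep if another poll will follow
    [ "$i" -lt "$tries" ] && sleep "$interval"
    i=$((i + 1))
  done
  echo "timed out waiting for $service to start on $node" >&2
  return 1
}
```

For the test above, running wait_for_owner U1-NFSsvc cipglu1mqs01-hb on node 1 after the fence_node command would return once the service shows as started there.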

Repeat the “fence_node” test on the remaining node.

8.2 “Power Off” test

Log in to the iLO of the active node and power it off. On the iLO web page, select “Power Management → Server Power → Press and Hold”; this uses the virtual power switch to force the server off. The service and resources will fail over to the remaining node.

As in 8.1, use “clustat” and “tail -f /var/log/messages” to watch and verify the activity in real time.

To continue with the next test, both nodes must be cluster members (Online).

Repeat the “Power Off” test on the remaining node.

8.3 “Reboot” test

Determine which node is the active node with the “clustat” command, then reboot the active node:

init 6

or

shutdown -r now

This initiates an orderly shutdown of the current node, and the service and resources fail over to the remaining node. When the node comes back up, it should rejoin the cluster automatically.

As in 8.1, use “clustat” and “tail -f /var/log/messages” to watch and verify the activity in real time.

To continue with the next test, both nodes must be cluster members (Online).

Repeat the “Reboot” test on the remaining node.

8.4 “Network Down” test

We will run two kinds of “Network Down” test: one for the public network and one for the heartbeat network.

8.4.1 “Public NIC Down” Test

Determine which node is the active node with the “clustat” command, then disable the public NIC on the active node:

ifconfig eth0 down

The NFS service should then fail over to the other node. As in 8.1, use “clustat” and “tail -f /var/log/messages” to watch and verify the activity in real time.

To continue with the next test, both nodes must be cluster members (Online).

Repeat the “Public NIC Down” test on the remaining node.

8.4.2 “Heartbeat NIC Down” Test

Determine which node is the active node with the “clustat” command, then disable the heartbeat NIC on the active node:

ifconfig eth1 down

The other (standby) node should then be fenced (rebooted), and the NFS service should keep running on the current active node. As in 8.1, use “clustat” and “tail -f /var/log/messages” to watch and verify the activity in real time.

Once the fenced node is back up, it will not rejoin the cluster automatically. Rejoin it manually from LUCI, or run the following commands to start the cluster services manually:

service cman restart

service clvmd restart

service rgmanager restart

service ricci restart

service luci restart

service qdiskd restart

service gfs2 restart
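Red Hat's RHEL 6 cluster documentation starts the cluster software in the order cman, clvmd, gfs2, rgmanager, which differs slightly from the list above (rgmanager before gfs2). The sketch below follows the documented order and then starts ricci and luci. It leaves qdiskd out on the assumption that the cman init script starts it when a quorum disk is configured; keep the separate qdiskd command if your nodes need it. The run/DRY_RUN wrapper is hypothetical and, by default, only prints the commands.

```shell
#!/bin/bash
# Sketch: start the cluster stack on a node in the documented RHEL 6 order.
# Assumption: cman starts qdiskd itself when a quorum disk is configured.
# DRY_RUN=1 (the default) only prints the commands.

# Print the command when DRY_RUN=1 (the default); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

start_cluster_stack() {
  local svc
  for svc in cman clvmd gfs2 rgmanager ricci luci; do
    # Stop at the first failure: later services depend on earlier ones.
    run service "$svc" start || { echo "failed to start $svc" >&2; return 1; }
  done
}
```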

To continue with the next test, both nodes must be cluster members (Online).

Repeat the “Heartbeat NIC Down” test on the remaining node.

8.5 “Fibre-channel Failure” test

Determine which node is the active node with the “clustat” command, then identify the HBA names on the active node with the following command:

powermt display hba_mode

    Symmetrix logical device count=0
    CLARiiON logical device count=4
    Hitachi logical device count=0
    HP xp logical device count=0
    Ess logical device count=0
    Invista logical device count=0
    ==============================================================================
    ----- Host Bus Adapters ---------  ------ I/O Paths -----  Stats
    ###  HW Path                       Summary   Total   Dead  Q-IOs Mode
    ==============================================================================
       4 qla2xxx                       optimal      8      0      0 Enabled
       5 qla2xxx                       optimal      8      0      0 Enabled

On this server the HBAs are 4 and 5, so disable both with the following commands:

powermt disable hba=4

powermt disable hba=5

The current active node should then be fenced (rebooted). As in 8.1, use “clustat” and “tail -f /var/log/messages” to watch and verify the activity in real time. Once the node is back up, it should rejoin the cluster automatically.
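Reading the HBA numbers off the powermt output and disabling them can be combined into one step. hba_ids and disable_hbas are hypothetical helpers that assume the table layout shown above (HBA rows start with the ### number and end with the Mode column); the run/DRY_RUN wrapper is also hypothetical and, by default, only prints the powermt commands. Run it only on the node under test.

```shell
#!/bin/bash
# Hypothetical helpers built on "powermt display hba_mode" output.

# Print the HBA numbers (the "###" column) from powermt output on stdin.
hba_ids() {
  # HBA rows start with a number and end with the mode, e.g.
  #    4 qla2xxx   optimal   8   0   0  Enabled
  awk '$1 ~ /^[0-9]+$/ && ($NF == "Enabled" || $NF == "Disabled") { print $1 }'
}

# Print the command when DRY_RUN=1 (the default); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Disable every HBA listed in the powermt output on stdin.
# Usage: powermt display hba_mode | disable_hbas
disable_hbas() {
  local id
  for id in $(hba_ids); do
    run powermt disable hba="$id"
  done
}
```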

To continue with the next test, both nodes must be cluster members (Online).

Repeat the “Fibre-channel Failure” test on the remaining node.