In the past few days, some of my Pods kept crashing, and the OS syslog showed that the OOM killer was killing the container processes. I did some research to find out how this works.

Pod memory limit and cgroup memory settings

Let's test on K3s. Create a pod with the memory limit set to 123Mi, a number that is easy to recognize.

kubectl run --restart=Never --rm -it --image=ubuntu --limits='memory=123Mi' -- sh

If you don't see a command prompt, try pressing enter.
root@sh:/#

In another shell, find the uid of the pod:

kubectl get pods sh -o yaml | grep uid

uid: bc001ffa-68fc-11e9-92d7-5ef9efd9374c

On the server where the pod is running, check the cgroup settings using the uid of the pod:

cd /sys/fs/cgroup/memory/kubepods/burstable/podbc001ffa-68fc-11e9-92d7-5ef9efd9374c
cat memory.limit_in_bytes

128974848

The number 128974848 is exactly 123Mi (123 * 1024 * 1024). So it is clearer now: Kubernetes sets the memory limit through cgroups. Once the pod consumes more memory than the limit, the kernel invokes the OOM killer for that cgroup and a process in the container is killed.
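To make the path and the arithmetic concrete, here is a small Python sketch. It converts a Mi quantity to bytes and builds the pod-level cgroup directory used above. It assumes cgroup v1 with the cgroupfs driver layout seen on this K3s node (`/sys/fs/cgroup/memory/kubepods/<qos>/pod<uid>`). The helper names are hypothetical. Nodes that use the systemd cgroup driver or cgroup v2 lay these directories out differently.

```python
from pathlib import Path

MI = 1024 * 1024  # bytes in one mebibyte (Mi)

# Assumption: cgroup v1 with the cgroupfs driver, as on the K3s node above.
# The systemd cgroup driver and cgroup v2 use different paths and file names.
CGROUP_MEMORY_ROOT = Path("/sys/fs/cgroup/memory/kubepods")


def mi_to_bytes(mi: int) -> int:
    """Convert a Kubernetes 'Mi' quantity to bytes."""
    return mi * MI


def pod_memory_cgroup(pod_uid: str, qos_class: str) -> Path:
    """Hypothetical helper returning the pod-level memory cgroup directory.

    Guaranteed pods sit directly under kubepods; Burstable and BestEffort
    pods sit under a per-QoS-class subdirectory.
    """
    qos = qos_class.lower()
    if qos == "guaranteed":
        return CGROUP_MEMORY_ROOT / f"pod{pod_uid}"
    if qos in ("burstable", "besteffort"):
        return CGROUP_MEMORY_ROOT / qos / f"pod{pod_uid}"
    raise ValueError(f"unknown QoS class: {qos_class}")


def read_limit_bytes(cgroup_dir: Path) -> int:
    """Read memory.limit_in_bytes; only works when run on the node itself."""
    return int((cgroup_dir / "memory.limit_in_bytes").read_text().strip())


if __name__ == "__main__":
    path = pod_memory_cgroup("bc001ffa-68fc-11e9-92d7-5ef9efd9374c", "Burstable")
    print(path)
    print(mi_to_bytes(123))  # 128974848
    if path.exists():
        print(read_limit_bytes(path) == mi_to_bytes(123))
```

The `path.exists()` guard lets the script run anywhere. It only compares against the real cgroup file when run on the node that hosts the pod.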

Stress test

Let's install the stress tool in the Pod through the open shell session:

root@sh:/# apt update; apt install -y stress

Meanwhile, monitor the syslog on the node by running

dmesg -Tw

First, run the stress tool with 100M of memory, which is within the limit. It launches successfully.

root@sh:/# stress --vm 1 --vm-bytes 100M &
[1] 271
root@sh:/# stress: info: [271] dispatching hogs: 0 cpu, 0 io, 1 vm, 0 hdd

Now trigger the second stress test:

root@sh:/# stress --vm 1 --vm-bytes 50M
stress: info: [273] dispatching hogs: 0 cpu, 0 io, 1 vm, 0 hdd
stress: FAIL: [271] (415) <-- worker 272 got signal 9
stress: WARN: [271] (417) now reaping child worker processes
stress: FAIL: [271] (451) failed run completed in 7s

Together the two runs ask for about 150M, which is more than the 123Mi limit. The worker of the first stress run (PID 272, forked by PID 271) was killed immediately with signal 9 (SIGKILL).

Meanwhile, the syslog shows:

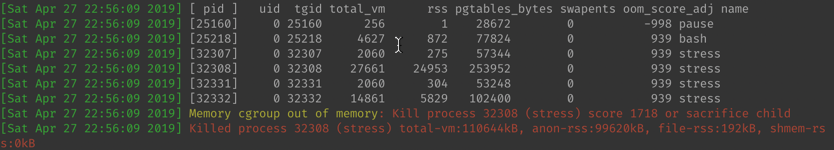

[Sat Apr 27 22:56:09 2020] stress invoked oom-killer: gfp_mask=0x14000c0(GFP_KERNEL), nodemask=(null), order=0, oom_score_adj=939
[Sat Apr 27 22:56:09 2020] stress cpuset=a2ed67c63e828da3849bf9f506ae2b36b4dac5b402a57f2981c9bdc07b23e672 mems_allowed=0
[Sat Apr 27 22:56:09 2020] CPU: 0 PID: 32332 Comm: stress Not tainted 4.15.0-46-generic #49-Ubuntu
[Sat Apr 27 22:56:09 2020] Hardware name: BHYVE, BIOS 1.00 03/14/2014
[Sat Apr 27 22:56:09 2020] Call Trace:
[Sat Apr 27 22:56:09 2020] dump_stack+0x63/0x8b
[Sat Apr 27 22:56:09 2020] dump_header+0x71/0x285
[Sat Apr 27 22:56:09 2020] oom_kill_process+0x220/0x440
[Sat Apr 27 22:56:09 2020] out_of_memory+0x2d1/0x4f0
[Sat Apr 27 22:56:09 2020] mem_cgroup_out_of_memory+0x4b/0x80
[Sat Apr 27 22:56:09 2020] mem_cgroup_oom_synchronize+0x2e8/0x320
[Sat Apr 27 22:56:09 2020] ? mem_cgroup_css_online+0x40/0x40
[Sat Apr 27 22:56:09 2020] pagefault_out_of_memory+0x36/0x7b
[Sat Apr 27 22:56:09 2020] mm_fault_error+0x90/0x180
[Sat Apr 27 22:56:09 2020] __do_page_fault+0x4a5/0x4d0
[Sat Apr 27 22:56:09 2020] do_page_fault+0x2e/0xe0
[Sat Apr 27 22:56:09 2020] ? page_fault+0x2f/0x50
[Sat Apr 27 22:56:09 2020] page_fault+0x45/0x50
[Sat Apr 27 22:56:09 2020] RIP: 0033:0x558182259cf0
[Sat Apr 27 22:56:09 2020] RSP: 002b:00007fff01a47940 EFLAGS: 00010206
[Sat Apr 27 22:56:09 2020] RAX: 00007fdc18cdf010 RBX: 00007fdc1763a010 RCX: 00007fdc1763a010
[Sat Apr 27 22:56:09 2020] RDX: 00000000016a5000 RSI: 0000000003201000 RDI: 0000000000000000
[Sat Apr 27 22:56:09 2020] RBP: 0000000003200000 R08: 00000000ffffffff R09: 0000000000000000
[Sat Apr 27 22:56:09 2020] R10: 0000000000000022 R11: 0000000000000246 R12: ffffffffffffffff
[Sat Apr 27 22:56:09 2020] R13: 0000000000000002 R14: fffffffffffff000 R15: 0000000000001000
[Sat Apr 27 22:56:09 2020] Task in /kubepods/burstable/podbc001ffa-68fc-11e9-92d7-5ef9efd9374c/a2ed67c63e828da3849bf9f506ae2b36b4dac5b402a57f2981c9bdc07b23e672 killed as a result of limit of /kubepods/burstable/podbc001ffa-68fc-11e9-92d7-5ef9efd9374c
[Sat Apr 27 22:56:09 2020] memory: usage 125952kB, limit 125952kB, failcnt 3632
[Sat Apr 27 22:56:09 2020] memory+swap: usage 0kB, limit 9007199254740988kB, failcnt 0
[Sat Apr 27 22:56:09 2020] kmem: usage 2352kB, limit 9007199254740988kB, failcnt 0
[Sat Apr 27 22:56:09 2020] Memory cgroup stats for /kubepods/burstable/podbc001ffa-68fc-11e9-92d7-5ef9efd9374c: cache:0KB rss:0KB rss_huge:0KB shmem:0KB mapped_file:0KB dirty:0KB writeback:0KB inactive_anon:0KB active_anon:0KB inactive_file:0KB active_file:0KB unevictable:0KB
[Sat Apr 27 22:56:09 2020] Memory cgroup stats for /kubepods/burstable/podbc001ffa-68fc-11e9-92d7-5ef9efd9374c/79fae7c2724ea1b19caa343fed8da3ea84bbe5eb370e5af8a6a94a090d9e4ac2: cache:0KB rss:48KB rss_huge:0KB shmem:0KB mapped_file:0KB dirty:0KB writeback:0KB inactive_anon:0KB active_anon:48KB inactive_file:0KB active_file:0KB unevictable:0KB
[Sat Apr 27 22:56:09 2020] Memory cgroup stats for /kubepods/burstable/podbc001ffa-68fc-11e9-92d7-5ef9efd9374c/a2ed67c63e828da3849bf9f506ae2b36b4dac5b402a57f2981c9bdc07b23e672: cache:0KB rss:123552KB rss_huge:0KB shmem:0KB mapped_file:0KB dirty:0KB writeback:0KB inactive_anon:0KB active_anon:123548KB inactive_file:0KB active_file:0KB unevictable:0KB
[Sat Apr 27 22:56:09 2020] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
[Sat Apr 27 22:56:09 2020] [25160] 0 25160 256 1 28672 0 -998 pause
[Sat Apr 27 22:56:09 2020] [25218] 0 25218 4627 872 77824 0 939 bash
[Sat Apr 27 22:56:09 2020] [32307] 0 32307 2060 275 57344 0 939 stress
[Sat Apr 27 22:56:09 2020] [32308] 0 32308 27661 24953 253952 0 939 stress
[Sat Apr 27 22:56:09 2020] [32331] 0 32331 2060 304 53248 0 939 stress
[Sat Apr 27 22:56:09 2020] [32332] 0 32332 14861 5829 102400 0 939 stress
[Sat Apr 27 22:56:09 2020] Memory cgroup out of memory: Kill process 32308 (stress) score 1718 or sacrifice child
[Sat Apr 27 22:56:09 2020] Killed process 32308 (stress) total-vm:110644kB, anon-rss:99620kB, file-rss:192kB, shmem-rss:0kB
[Sat Apr 27 22:56:09 2020] oom_reaper: reaped process 32308 (stress), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB

The process with host PID 32308 is OOM-killed. This is the 100M worker that appears as PID 272 inside the container. The more interesting part is at the end of the log.

The last part of the log lists the processes in this pod that the OOM killer considers as candidates. The basic pause process, which holds the pod's network namespace, has an oom_score_adj value of -998, so it is very unlikely to be chosen. The other processes in the container all have an oom_score_adj value of 939. We can validate this value with the formula for Burstable pods from the Kubernetes documentation:

min(max(2, 1000 - (1000 * memoryRequestBytes) / machineMemoryCapacityBytes), 999)

Find the node's allocatable memory with

kubectl describe nodes k3s | grep Allocatable -A 5

Allocatable:
  cpu:                1
  ephemeral-storage:  49255941901
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             2041888Ki

If the memory request is not set, it defaults to the limit value. So we have the oom_score_adj value as

1000 - (1000 * 123 * 1024) / 2041888 = 938.32, which is close to the value 939 in the syslog. Here the allocatable memory stands in for the machine capacity. (I am not sure exactly how 939 is obtained.) Notice that all the processes in the container have the same oom_score_adj value. The OOM killer calculates a score for each process from its memory usage and adjusts it with oom_score_adj. In the end, it kills the first stress worker, which uses the most memory (100M) and has the highest score, 1718.
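Both numbers in this paragraph, 939 and 1718, can be reproduced with a short Python sketch. It rests on two assumptions that I have not confirmed against the running system:

1. The kubelet evaluates the Burstable formula in integer arithmetic, which truncates 61.68 to 61. The 2041888Ki figure is also taken as the node capacity.
2. The kernel score follows the oom_badness() heuristic of 4.15-era kernels as I understand it. Points are rss pages plus swap entries plus page-table pages. oom_score_adj is added after being scaled by `totalpages // 1000`. The printed score is `points * 1000 // totalpages`. Here `totalpages` is the cgroup limit in 4 KiB pages (125952 kB / 4 = 31488).

The function names are illustrative. They are not kubelet or kernel APIs.

```python
PAGE_SIZE = 4096  # bytes; assumes 4 KiB pages (x86_64)


def burstable_oom_score_adj(request_bytes: int, capacity_bytes: int) -> int:
    """Burstable formula from the Kubernetes docs, evaluated with integer
    division (assumed to mirror the kubelet's integer arithmetic)."""
    adj = 1000 - (1000 * request_bytes) // capacity_bytes
    return min(max(2, adj), 999)


def oom_badness_score(rss_pages: int, swap_pages: int, pgtables_bytes: int,
                      oom_score_adj: int, totalpages: int) -> int:
    """Sketch of the 4.15-era heuristic; returns the 'score' printed in
    'Kill process ... score N or sacrifice child'."""
    points = rss_pages + swap_pages + pgtables_bytes // PAGE_SIZE
    # oom_score_adj is scaled by totalpages / 1000 (integer division)
    points += oom_score_adj * (totalpages // 1000)
    points = max(points, 1)  # the kernel never returns less than 1 here
    return points * 1000 // totalpages


if __name__ == "__main__":
    request = 123 * 1024 * 1024   # request defaults to the 123Mi limit
    capacity = 2041888 * 1024     # 2041888Ki, assumed to be the capacity
    adj = burstable_oom_score_adj(request, capacity)
    print(adj)  # 939

    totalpages = 125952 * 1024 // PAGE_SIZE  # cgroup limit in pages: 31488
    # (pid, rss pages, pgtables_bytes) rows from the dmesg process table
    rows = [(25218, 872, 77824), (32307, 275, 57344), (32308, 24953, 253952),
            (32331, 304, 53248), (32332, 5829, 102400)]
    scores = {pid: oom_badness_score(rss, 0, pgt, adj, totalpages)
              for pid, rss, pgt in rows}
    print(max(scores, key=scores.get), scores[32308])  # 32308 1718
```

If these assumptions hold, the 939 comes from integer truncation rather than rounding. Because every candidate shares the same oom_score_adj, the process with the largest rss wins.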

Conclusion

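Kubernetes enforces the Pod memory limit with cgroups and the OOM killer. We need to be careful to distinguish an OS-level OOM, where the whole node runs out of memory, from a pod-level OOM, where a container exceeds its own cgroup limit.

Assign Memory Resources to Containers and Pods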

This section shows how to assign a memory request and a memory limit to a Container. A Container is guaranteed to have as much memory as it requests, but it is not allowed to use more memory than its limit.

To check the Kubernetes version, enter

kubectl version

A few of the steps on this page require you to run the metrics-server service in your cluster. If you have the metrics-server running, you can skip those steps.

If you are running Minikube, run the following command to enable the metrics-server:

minikube addons enable metrics-server

To see whether the metrics-server or another provider of the resource metrics API (metrics.k8s.io) is running, run the following command:

kubectl get apiservices

If the resource metrics API is available, the output includes a reference to metrics.k8s.io:

NAME
v1beta1.metrics.k8s.io

Create a namespace

Create a namespace so that the resources you create in this exercise are isolated from the rest of your cluster.

kubectl create namespace mem-example

Specify a memory request and a memory limit

To specify a memory request for a Container, include the resources:requests field in the Container's resource manifest. To specify a memory limit, include resources:limits.

In this exercise, you create a Pod that has one Container. The Container has a memory request of 100 MiB and a memory limit of 200 MiB. Here's the configuration file for the Pod:

pods/resource/memory-request-limit.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
  namespace: mem-example
spec:
  containers:
  - name: memory-demo-ctr
    image: polinux/stress
    resources:
      limits:
        memory: "200Mi"
      requests:
        memory: "100Mi"
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]

The args section in the configuration file provides arguments for the Container when it starts. The "--vm-bytes", "150M" arguments tell the Container to attempt to allocate 150 MiB of memory.

Create the Pod:

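kubectl apply -f https://k8s.io/examples/pods/resource/memory-request-limit.yaml --namespace=mem-example

Verify that the Pod's Container is running:

kubectl get pod memory-demo --namespace=mem-example

View detailed information about the Pod:

kubectl get pod memory-demo --output=yaml --namespace=mem-example

The output shows that the one Container in the Pod has a memory request of 100 MiB and a memory limit of 200 MiB: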

...
resources:
  limits:
    memory: 200Mi
  requests:
    memory: 100Mi
...

Run kubectl top to fetch the metrics for the Pod:

kubectl top pod memory-demo --namespace=mem-example

NAME CPU(cores) MEMORY(bytes)

memory-demo <something> 162856960
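As a quick sanity check on the kubectl top output, the following Python sketch converts the reported bytes to MiB and compares them with the Container's request and limit. The numbers come from the manifest and output above. The helper name is illustrative.

```python
MIB = 1024 * 1024  # bytes in one MiB


def classify_usage(usage_bytes: int, request_mib: int, limit_mib: int) -> str:
    """Describe where a memory reading sits relative to request and limit."""
    if usage_bytes > limit_mib * MIB:
        return "over limit"
    if usage_bytes > request_mib * MIB:
        return "above request, within limit"
    return "within request"


if __name__ == "__main__":
    usage = 162856960  # MEMORY(bytes) reported by kubectl top
    print(usage / MIB)  # 155.3125
    print(classify_usage(usage, request_mib=100, limit_mib=200))
```

So the Pod uses about 155 MiB. That is more than its 100 MiB request, but within its 200 MiB limit. The extra 5 MiB or so over the 150 MiB that stress allocates is presumably process overhead.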

kubectl delete pod memory-demo --namespace=mem-example

Exceed a Container's memory limit

A Container can exceed its memory request if the Node has memory available. But a Container is not allowed to use more than its memory limit. If a Container allocates more memory than its limit, the Container becomes a candidate for termination. If the Container continues to consume memory beyond its limit, the Container is terminated. If a terminated Container can be restarted, the kubelet restarts it, as with any other type of runtime failure.

In this exercise, you create a Pod that attempts to allocate more memory than its limit. Here is the configuration file for a Pod that has one Container with a memory request of 50 MiB and a memory limit of 100 MiB:

pods/resource/memory-request-limit-2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo-2
  namespace: mem-example
spec:
  containers:
  - name: memory-demo-2-ctr
    image: polinux/stress
    resources:
      requests:
        memory: "50Mi"
      limits:
        memory: "100Mi"
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "250M", "--vm-hang", "1"]

In the args section of the configuration file, you can see that the Container will attempt to allocate 250 MiB of memory, which is well above the 100 MiB limit.

Create the Pod:

kubectl apply -f https://k8s.io/examples/pods/resource/memory-request-limit-2.yaml --namespace=mem-example

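Check the Pod's status:

kubectl get pod memory-demo-2 --namespace=mem-example

At this point, the Container might be running or killed. Repeat the preceding command until the Container is killed:

NAME            READY     STATUS      RESTARTS   AGE
memory-demo-2   0/1       OOMKilled   1          24s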

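Get a more detailed view of the Container status:

kubectl get pod memory-demo-2 --output=yaml --namespace=mem-example

The output shows that the Container was killed because it ran out of memory (OOM):

lastState:
  terminated:
    containerID: docker://65183c1877aaec2e8427bc95609cc52677a454b56fcb24340dbd22917c23b10f
    exitCode: 137
    finishedAt: 2017-06-20T20:52:19Z
    reason: OOMKilled
    startedAt: null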
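The exitCode of 137 follows the common "128 plus signal number" convention, so it points to signal 9 (SIGKILL). That is the same signal the stress worker received in the first part of this post. A minimal Python sketch of that decoding follows, assuming the 128 + N convention. Note that the exit code alone only says the process got SIGKILL. It is the reason field, OOMKilled, that attributes the kill to memory.

```python
import signal
from typing import Optional


def signal_from_exit_code(exit_code: int) -> Optional[str]:
    """Map a '128 + N' exit code to the name of signal N, if there is one."""
    if exit_code <= 128:
        return None  # normal exit status, not a signal
    try:
        return signal.Signals(exit_code - 128).name
    except ValueError:
        return None  # no such signal number on this platform


if __name__ == "__main__":
    print(signal_from_exit_code(137))  # SIGKILL
    print(signal_from_exit_code(1))    # None
```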

The Container in this exercise can be restarted, so the kubelet restarts it. Run the following command several times to see that the Container is repeatedly killed and restarted:

kubectl get pod memory-demo-2 --namespace=mem-example

NAME            READY     STATUS      RESTARTS   AGE
memory-demo-2   0/1       OOMKilled   1          37s

kubectl get pod memory-demo-2 --namespace=mem-example

NAME            READY     STATUS    RESTARTS   AGE
memory-demo-2   1/1       Running   2          40s

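View detailed information about the Pod history:

kubectl describe pod memory-demo-2 --namespace=mem-example

The output shows that the Container starts and fails repeatedly:

... Normal Created Created container with id 66a3a20aa7980e61be4922780bf9d24d1a1d8b7395c09861225b0eba1b1f8511
... Warning BackOff Back-off restarting failed container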

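View detailed information about your cluster's Nodes:

kubectl describe nodes

The output includes a record of the Container being killed because of an out-of-memory condition:

Warning OOMKilling Memory cgroup out of memory: Kill process 4481 (stress) score 1994 or sacrifice child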

kubectl delete pod memory-demo-2 --namespace=mem-example

Specify a memory request that is too big for your Nodes

Memory requests and limits are associated with Containers, but it is useful to think of a Pod as having a memory request and limit. The memory request for the Pod is the sum of the memory requests for all the Containers in the Pod. Likewise, the memory limit for the Pod is the sum of the limits of all the Containers in the Pod.

Pod scheduling is based on requests. A Pod is scheduled to run on a Node only if the Node has enough available memory to satisfy the Pod's memory request.
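The request arithmetic described above can be sketched in a few lines of Python. The Pod's request is the sum of its Containers' requests, and a Node is a candidate only if that sum fits in what is left of its allocatable memory. This is a deliberately simplified model. It ignores init containers, Pod overhead, and every other scheduler check. The function names and node figures are hypothetical.

```python
from dataclasses import dataclass
from typing import List

MI = 1024 ** 2
GI = 1024 ** 3


@dataclass
class Node:
    name: str
    allocatable_bytes: int
    requested_bytes: int  # sum of requests of Pods already placed on the node


def pod_request(container_requests: List[int]) -> int:
    """A Pod's memory request is the sum of its Containers' requests."""
    return sum(container_requests)


def feasible_nodes(nodes: List[Node], request: int) -> List[str]:
    """Names of nodes whose remaining allocatable memory covers the request."""
    return [n.name for n in nodes
            if n.allocatable_bytes - n.requested_bytes >= request]


if __name__ == "__main__":
    # Three hypothetical nodes, 2 GiB allocatable each, 512 MiB already requested
    nodes = [Node(f"node-{i}", 2 * GI, 512 * MI) for i in range(3)]
    print(feasible_nodes(nodes, pod_request([100 * MI])))   # all three nodes
    print(feasible_nodes(nodes, pod_request([1000 * GI])))  # [] -> stays Pending
```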

In this exercise, you create a Pod that has a memory request so big that it exceeds the capacity of any Node in your cluster. Here is the configuration file for a Pod that has one Container with a request for 1000 GiB of memory, which likely exceeds the capacity of any Node in your cluster.

pods/resource/memory-request-limit-3.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo-3
  namespace: mem-example
spec:
  containers:
  - name: memory-demo-3-ctr
    image: polinux/stress
    resources:
      limits:
        memory: "1000Gi"
      requests:
        memory: "1000Gi"
    command: ["stress"]
    args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]

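Create the Pod:

kubectl apply -f https://k8s.io/examples/pods/resource/memory-request-limit-3.yaml --namespace=mem-example

View the Pod status:

kubectl get pod memory-demo-3 --namespace=mem-example

The output shows that the Pod status is Pending. That is, the Pod is not scheduled to run on any Node, and it will remain in the Pending state indefinitely:

NAME            READY     STATUS    RESTARTS   AGE
memory-demo-3   0/1       Pending   0          25s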

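View detailed information about the Pod, including events:

kubectl describe pod memory-demo-3 --namespace=mem-example

The output shows that the Container cannot be scheduled because of insufficient memory on the Nodes:

Events:
  ...  Reason            Message
       ------            -------
  ...  FailedScheduling  No nodes are available that match all of the following predicates:: Insufficient memory (3).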

Memory units

The memory resource is measured in bytes. You can express memory as a plain integer or a fixed-point number with one of these suffixes: E, P, T, G, M, K, Ei, Pi, Ti, Gi, Mi, Ki. For example, the following represent approximately the same value:

128974848, 129e6, 129M, 123Mi
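To see how close those four spellings really are, here is a simplified Python parser for the suffixes listed above. It is a sketch, not Kubernetes' own resource.Quantity implementation. It handles only plain numbers, e-notation, and the decimal (powers of 1000) and binary (powers of 1024) suffixes. Other forms, such as the lowercase m (milli) suffix, are rejected.

```python
import re
from decimal import Decimal

# Decimal suffixes are powers of 1000; binary suffixes are powers of 1024.
DECIMAL = {"K": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}

# A number (optional fraction, optional e-notation exponent), then an optional suffix.
_QUANTITY = re.compile(r"^([0-9]+(?:\.[0-9]+)?(?:[eE][0-9]+)?)([KMGTPE]i?)?$")


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '123Mi' or '129e6' to bytes.

    Simplified sketch: fractional bytes are truncated, and forms outside
    the listed suffixes (for example 'm' for milli) raise ValueError.
    """
    match = _QUANTITY.match(quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    multiplier = 1
    if suffix:
        multiplier = BINARY[suffix] if suffix.endswith("i") else DECIMAL[suffix]
    return int(Decimal(number) * multiplier)


if __name__ == "__main__":
    for q in ["128974848", "129e6", "129M", "123Mi"]:
        print(q, parse_memory(q))
```

The four values come out as 128974848, 129000000, 129000000 and 128974848 bytes. 123Mi is the exact value written to memory.limit_in_bytes earlier in this post.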

kubectl delete pod memory-demo-3 --namespace=mem-example

If you do not specify a memory limit

If you do not specify a memory limit for a Container, one of the following situations applies:

- The Container has no upper bound on the amount of memory it uses. The Container could use all of the memory available on the Node where it is running, which in turn could invoke the OOM Killer. Further, in case of an OOM Kill, a container with no resource limits has a greater chance of being killed.
- The Container is running in a namespace that has a default memory limit, and the Container is automatically assigned the default limit. Cluster administrators can use a LimitRange to specify a default value for the memory limit.

Motivation for memory requests and limits

By configuring memory requests and limits for the Containers that run in your cluster, you can make efficient use of the memory resources available on your cluster's Nodes. By keeping a Pod's memory request low, you give the Pod a good chance of being scheduled. By having a memory limit that is greater than the memory request, you accomplish two things:

- The Pod can have bursts of activity where it makes use of memory that happens to be available.
- The amount of memory a Pod can use during a burst is limited to some reasonable amount.

Clean up

Delete your namespace. This deletes all the Pods that you created for this task:

kubectl delete namespace mem-example
